About intelligent plants, robots and the subjectivity of time

How a network of plants and computers could warn us about air pollution, and how robots could adapt to their human counterparts to make cooperation between humans and machines more pleasant. Robotics expert Heiko Hamann talks about two current projects of his research group: WatchPlant and ChronoPilot.

Mr Hamann, you have been professor of cyber-physical systems at the University of Konstanz since the winter semester 2022/23. What exactly does this field involve?

Cyber-physical systems are systems in which physical components – such as robots, sensor nodes or other machines – are networked with digital systems and cyberspace to complete tasks together. The individual components of these systems are usually distributed across several locations and communicate via wireless technologies. In WatchPlant, the project we will also talk about, we are developing just such a system. However, my main research area is actually swarm robotics. That is one of the reasons why I applied for the position here in Konstanz. With the Cluster of Excellence "Centre for the Advanced Study of Collective Behaviour", the university offers an ideal environment for our kind of research.

Speaking of WatchPlant: What is the project about?

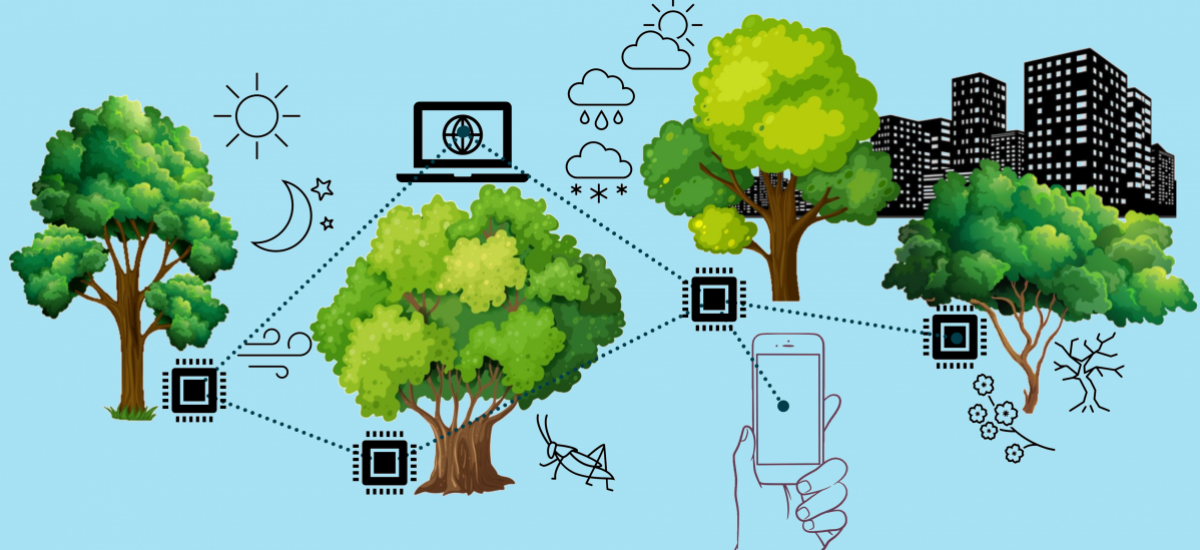

Our vision is to use what is known as phyto-sensing to monitor the air quality in cities. Of course, pollutant loads in the air are already being recorded in many cities. The innovation in our project is that we want to use the plants themselves as sensors, which would make it possible to monitor large areas closely and cost-effectively. The basic idea, in short, is the following: We share the air we breathe with countless plants. And just as air quality affects our metabolism and health, high levels of particulate matter or ozone, for example, affect the plants' physiology. We study and record this impact in order to draw conclusions about the state of the environment.

WatchPlant intends to use a network of smart plants and sensor nodes to monitor air quality in cities. Users in cities could then receive warnings about locally elevated pollutant levels via an app, for example. © AG Hamann

So the plants are a kind of living measuring station?

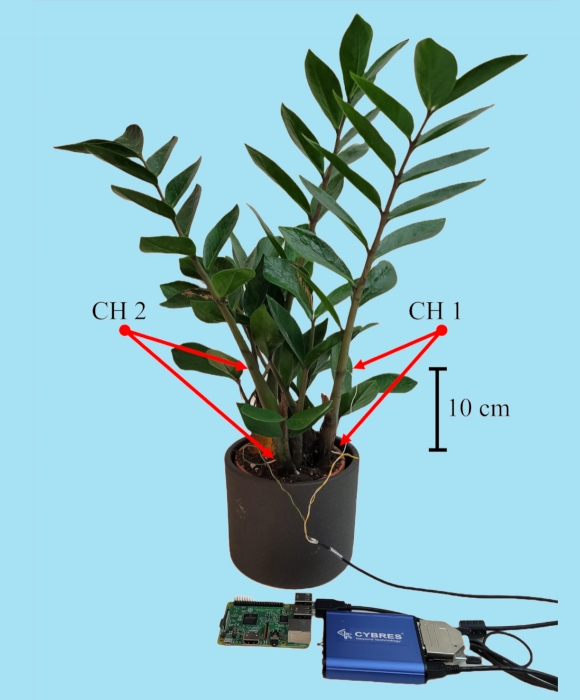

Exactly. The challenge is to read out the relevant data from the plant. Our WatchPlant team develops the necessary methods. In my lab, for example, we use electrophysiological methods. That is, we measure the electrical voltage between two sections of the plant and study how it changes over time and under changing environmental conditions. This is very similar to what a doctor does during a long-term ECG to draw conclusions about the cardiovascular system. In addition to that, our collaboration partners, who are also involved in WatchPlant, are developing methods for obtaining information on the condition of the plant from plant sap. Our aim is to use both data sources in combination.

Plants are equipped with measuring electrodes attached 10 cm apart on the stem. The ZZ plant (Zamioculcas zamiifolia) shown in the image is read out via two measuring channels (CH1 and CH2). © AG Hamann

And how can you use these data to draw conclusions about air quality and other conditions at the plant's location?

The electrophysiological data that we record in our lab already contain a lot of information. Almost whenever something changes in the plant's environment, the electrical signal we measure changes, too. The difficult part is interpreting these data, because no comparable reference data have been published in plant physiology research so far. As computer scientists, we deliberately decided to use a black-box method supported by artificial intelligence (AI) to classify the measured signals. Specifically, we record the plant's electrical signal under controlled conditions, for example at different ozone concentrations in the air or under different lighting conditions. We then digitally label these data and use them to train an artificial neural network. In this way, the AI system learns to recognize certain environmental events in the data stream on its own as soon as they occur.

In the Hamann lab, electrophysiological measurement data are collected in a GrowBox under controlled environmental conditions (in the example: specific illumination) in order to train an AI system. © AG Hamann
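The principle of learning from labeled recordings can be sketched in a few lines. The interview does not describe the network's architecture, so this Python example uses the simplest possible stand-in: a single artificial neuron (a perceptron) trained on tagged feature vectors. The two features per window (mean and standard deviation of the voltage), the labels (0 = baseline, 1 = a controlled event such as raised ozone) and all numbers are invented for illustration.

```python
def predict(weights, bias, x):
    """Output 1 (event) if the weighted sum of the features exceeds zero, else 0."""
    activation = sum(w * xi for w, xi in zip(weights, x)) + bias
    return 1 if activation > 0 else 0


def train_perceptron(samples, labels, epochs=50, lr=0.1):
    """Train a single artificial neuron on labeled feature vectors."""
    weights = [0.0] * len(samples[0])
    bias = 0.0
    for _ in range(epochs):
        for x, y in zip(samples, labels):
            error = y - predict(weights, bias, x)
            if error:  # adjust only when the prediction was wrong
                weights = [w + lr * error * xi for w, xi in zip(weights, x)]
                bias += lr * error
    return weights, bias


# Hypothetical (mean, std) features of signal windows recorded in a growth chamber.
samples = [(0.1, 0.05), (0.2, 0.04), (0.15, 0.06),  # baseline conditions
           (1.0, 0.4), (1.2, 0.5), (0.9, 0.35)]     # controlled event, e.g. ozone
labels = [0, 0, 0, 1, 1, 1]

weights, bias = train_perceptron(samples, labels)
print(predict(weights, bias, (1.1, 0.45)), predict(weights, bias, (0.12, 0.05)))
```

Once trained, the same `predict` call can be applied to each new window as it arrives, which is the "recognize events as soon as they occur" step in miniature.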

What do you do with this information?

We will use the data on different time scales. After all, the project goal is to build an entire network of many of these plant monitoring stations that continuously provides data. Since the AI-based analysis of data is relatively fast, such a network could provide near real-time information about air quality or similar environmental data from the locations of individual plants. This could be used, for example, to issue automated warnings via a mobile phone app when local pollution levels are too high. Even local residents might support the project by equipping their own garden plants with the necessary sensors and turning them into measuring stations.
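How such a network might turn analysis results into local warnings can be sketched as follows. This Python example assumes, hypothetically, that each plant station periodically reports a timestamped pollution score between 0 and 1 (for instance, an AI classifier's confidence that a pollution event is occurring). Readings older than a maximum age are ignored so that warnings reflect the current situation. The station IDs, locations, threshold and age limit are all assumed for illustration, not taken from the project.

```python
from dataclasses import dataclass


@dataclass
class StationReading:
    station_id: str         # hypothetical identifier of a plant monitoring station
    location: str           # e.g. a park or street name
    timestamp: float        # time of the measurement, in seconds
    pollution_score: float  # hypothetical classifier output between 0 and 1


def local_warnings(readings, now, threshold=0.8, max_age=600.0):
    """Return warning messages for recent readings whose score exceeds the threshold."""
    return [
        f"Elevated pollution near {r.location} (station {r.station_id})"
        for r in readings
        if now - r.timestamp <= max_age and r.pollution_score > threshold
    ]


readings = [
    StationReading("p-01", "Park A", 1000.0, 0.92),
    StationReading("p-02", "Main Street", 1000.0, 0.35),
    StationReading("p-03", "Harbour", 100.0, 0.95),  # too old to count at now=1200
]
for message in local_warnings(readings, now=1200.0):
    print(message)
```

A real app would push these messages to users near the affected station rather than print them.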

Along with botanists and sensor and network technicians, we work with modelers, specialized mathematicians who look at the data over longer periods. Our goal is to relate these data to other information from the same time period – for example, population health data. In this way, correlations between environmental data and other relevant measured values could be detected and quantified, which in turn could provide decision-makers at various levels with a basis for fact-based decision-making.
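One standard way to quantify such a relationship is Pearson's correlation coefficient r, which ranges from -1 to 1. The interview does not say which statistical methods the modelers use, so this Python sketch is only an illustration, applied to two hypothetical, time-aligned series (say, a weekly pollution index from the plant network and a weekly count of respiratory complaints). A correlation on its own does not establish cause and effect.

```python
from math import sqrt


def pearson_r(xs, ys):
    """Pearson correlation coefficient of two equally long, time-aligned series."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two series of equal length with at least two values")
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    spread_x = sqrt(sum((x - mean_x) ** 2 for x in xs))
    spread_y = sqrt(sum((y - mean_y) ** 2 for y in ys))
    if spread_x == 0 or spread_y == 0:
        raise ValueError("correlation is undefined for a constant series")
    return cov / (spread_x * spread_y)


# Made-up weekly values, for illustration only.
weekly_pollution_index = [0.2, 0.4, 0.5, 0.7, 0.9]
weekly_complaints = [11, 14, 15, 19, 22]
print(round(pearson_r(weekly_pollution_index, weekly_complaints), 2))
```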

Let's talk about your second EU project, ChronoPilot. In this project, too, you use AI to interpret physiological data – but in this case, data from humans.

That's right. Apart from that, however, the projects are completely different. Roughly speaking, ChronoPilot is about making the collaboration between humans and robots more enjoyable for humans. You could say that we are currently in the midst of a new industrial revolution, whose significance is perhaps comparable to the introduction of mass production and assembly line work at the end of the 19th century, or to the increasing use of electronics and IT in production in the second half of the 20th century. This time, it is primarily cyber-physical systems, or the combination of various technologies into "smart factories", that are driving automation forward.

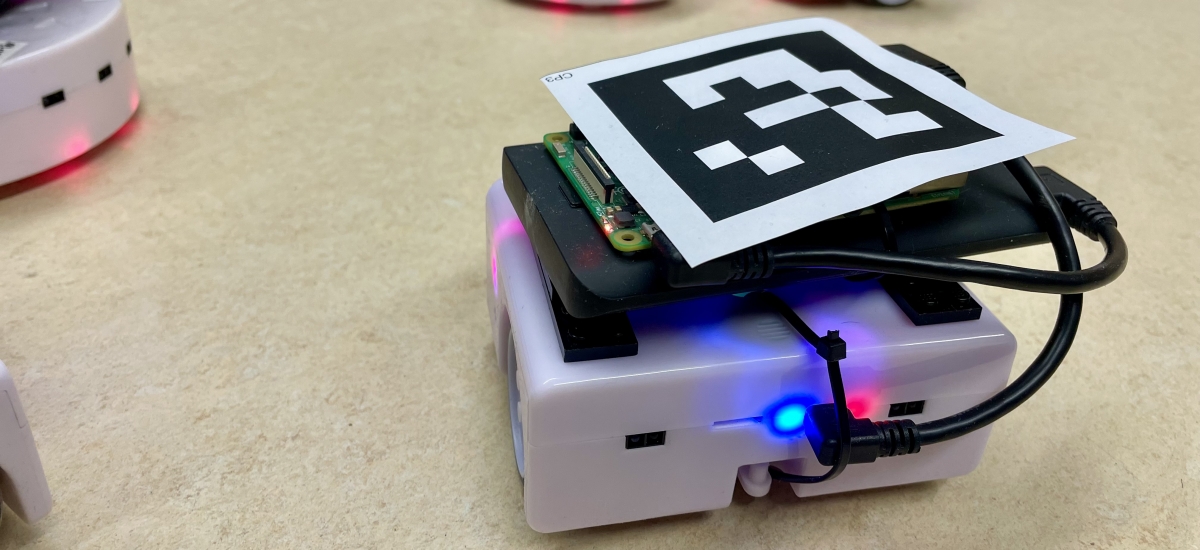

Just as with previous industrial revolutions, many people are looking anxiously at these new developments, and some of the fears people have today may be very similar to those of 150 years ago. One of the central questions is whether we humans can still perform our everyday work and find it fulfilling when tireless robots and other machines seem to set the pace. This is where ChronoPilot comes in. The machines – in our case robots – are to adapt their operation and, for example, reduce the working speed on their own if their human counterpart is stressed. So ultimately, humans set the pace. To do this, however, the robot must first interpret the emotional state of its human counterpart – which is where AI comes into play again.

Heiko Hamann's research group works with various robot models. In the ChronoPilot project, these adapted Thymio II robots are used. © AG Hamann
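The basic adaptation loop could look something like the following Python sketch. It assumes, hypothetically, that an AI component delivers a stress estimate between 0 (relaxed) and 1 (highly stressed) for the human partner; the interview does not describe how the ChronoPilot robots actually map such estimates to their behavior. The robot's target speed falls linearly from a base speed to a minimum as stress rises, and the actual speed closes only part of the gap to that target at each step, so the robot slows down gradually rather than abruptly. All parameter values are illustrative.

```python
def adapt_speed(current_speed, stress, base_speed=1.0, min_speed=0.3, smoothing=0.2):
    """Return the robot's next speed given a stress estimate for its human partner.

    stress: hypothetical estimate in [0, 1]; values outside the range are clipped.
    smoothing: fraction of the gap to the target speed closed in one control step.
    """
    stress = min(max(stress, 0.0), 1.0)
    # Higher stress -> lower target speed, but never below min_speed.
    target = base_speed - (base_speed - min_speed) * stress
    return current_speed + smoothing * (target - current_speed)


speed = 1.0
for stress in [0.1, 0.6, 0.9, 0.9, 0.4]:  # made-up stress estimates over time
    speed = adapt_speed(speed, stress)
    print(f"stress={stress:.1f} -> speed={speed:.2f}")
```

The smoothing step is a design choice: abrupt changes in a robot's pace could themselves be unsettling for the person working alongside it.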

So the machine recognizes how I am feeling in the current situation at work, and adapts the workflow accordingly, if necessary?

That's how you could describe the basic principle, yes. However, it is not just a matter of reducing workers' stress, for example by slowing the pace of work. The ChronoPilot project also aims to positively influence people's subjective perception of time through suitable stimulation. You have probably experienced this yourself: When you are working on an unpleasant or monotonous task, time seems to pass at a snail's pace, whereas enjoyable or exciting experiences seem to fly by. We want to make use of this subjective perception of time. When working with robots, workers should enter a state of immersion or flow in which they feel comfortable and in which time therefore passes quickly. That's what we are researching in my lab as part of the project.

The perception of time can be changed and depends on our mental resources, among other things. © Generated with Stable Diffusion on starryai.com and post-processed; University of Konstanz

Can you give an example of how that can be achieved?

Human perception of time can be altered by a variety of sensory impressions and stimuli; we know this from psychological research. These stimuli range from flashes of light and vibrations on the skin to music and movement. They can be generated in a targeted way, for example using headphones or augmented reality glasses. For haptic stimuli, our project partners use a vest equipped with vibration motors. In my lab, we are investigating how time perception can be influenced by the changing motion of groups of robots. In an initial study, we were able to show that an interaction task in which a human controls a group of small robots seems to pass more quickly for that person if many robots move comparatively fast. Movement speed and the number of robots are thus two possible parameters for influencing time perception.

Do you see other areas of application besides the world of work?

Automation is rapidly increasing in almost all areas of life – including private life. Think, for example, of driver assistance systems and autonomous vehicles. Here, too, our perception of time can play a role and even become dangerous if the person behind the wheel is under-challenged or distracted. Such dangers could be reduced in the future with the methods we are developing with our project partners in ChronoPilot. In general, we follow a philosophy in ChronoPilot and our other projects that clearly focuses on people and their environment. With our research, we would like to reduce the uneasiness that some people have about automation.

The interview was conducted by Daniel Schmidtke.

© Cover photo: Dr. S. Kernbach, CYBRES GmbH